MetaCOG: Enhancing AI Vision with Human-Inspired Metacognition

July 16, 2024

The human visual system, while remarkably sophisticated and generally reliable, is not immune to errors. These errors can manifest as optical illusions, such as the “anomalous motion” illusion [1] above. Despite such an error, most people can correctly infer that the image is not actually moving. This ability to distinguish appearance from reality can be crucial for knowing when to trust what we see and when to question it, including in everyday cases like seeing a mirage on the road.

The ability to distinguish what things are really like from how they seem (known as an appearance/reality distinction) requires going beyond building mental models of the outside world to building models of our own cognition. This is a form of metacognition, a higher-order process that enables the monitoring, evaluation, and regulation of other cognitive functions [2]. Metacognition can be applied to any cognitive domain, not just vision. For instance, people can recognize that they underestimate how long tasks take to complete, and then explicitly correct their intuitions to compensate for this bias. As another example, people can notice that they tend to be irritable and grouchy when they are hungry, and then have food to address the root cause of their bad mood rather than lashing out at people around them.

MetaCOG: More Robust Object Detection Through Metacognition

Just as the human visual system sometimes makes errors, object detectors–AI systems that infer the location of different types of objects in images–also make mistakes. For example, the GIF to the right shows a sequence of images of a scene and the outputs of a neural object detector for each image. Notice that the neural object detector makes mistakes in several images, including not detecting the TV, misclassifying the TV as a chair, and “hallucinating” an additional TV that is not there.

If an object detector experiences a visual illusion when deployed (e.g. as part of an autonomous driving system), it does not have the luxury of obtaining immediate human feedback. So it would be very beneficial to give object detectors the capacity to infer that they are experiencing a visual illusion (even if it’s of a very different kind than the ones people experience).

How could we do this? Consider again the first visual illusion above where a static image appears to be moving. As soon as you saw it, you might have naturally started moving your eyes around the image and fixating on different parts of it. While doing this, you might have noticed that, depending on where you look, different parts of the image move. Naturally, we know that’s not how the world works–our eye movements do not have the power to change the physical world–and we can conclude this is an artifact of our visual system. This is an instance of a strong prior that humans, and even infants, have that they can use to distinguish appearance from reality when their visual systems make mistakes.

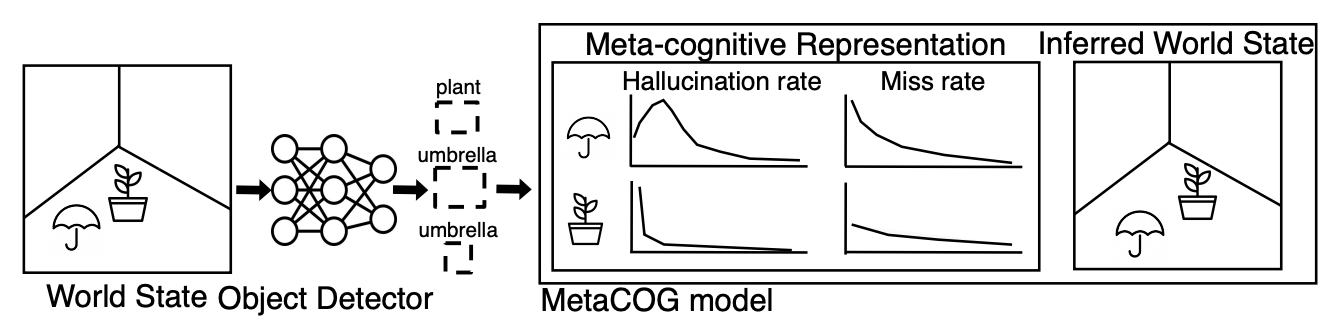

In recent work [3] published at Uncertainty in Artificial Intelligence (UAI) 2024 and available here, Basis collaborated with Marlene Berke and colleagues at Yale to apply this human-inspired approach to correcting visual system errors to the problem of object detection. We developed MetaCOG, a probabilistic model that can learn a metacognitive model of an object detection system and use the model to improve the accuracy of the system.

To make this concrete, suppose you have a robot that is navigating a room and obtaining images of a scene from various positions and orientations. As it analyzes the images through a visual system, it will obtain snapshots of detections which may or may not be wrong. This is where MetaCOG comes in. MetaCOG takes these inputs and expresses them as a combination of (1) a world representation of what is actually out there, and (2) a representation of what the visual detector is seeing correctly, what it’s hallucinating, and what it’s missing. In this work, we show that this is a tractable problem if you leverage basic human knowledge about the way the world works, known as the “Spelke principles” [4]: (1) objects persist through time and space, and (2) two objects cannot be in the same place at the same time. The intuition is that, if you see objects flickering in and out of existence, you can identify that something is wrong, because that’s not how objects work. If you notice that the flickering is persistent in a particular region in space, you can begin to suspect that something is actually there and that you occasionally fail to detect it. If instead you see some detections flickering at random locations with no predictable structure of object motion, then it might mean the system is prone to hallucinations.
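The flickering intuition can be illustrated with a deliberately crude heuristic. The sketch below is not MetaCOG's inference procedure; it assumes detections have already been mapped into world coordinates, that every frame views the whole scene, and that a fixed threshold separates the two cases. The function names, the grid cell size, and the threshold are all hypothetical choices made for illustration.

```python
from collections import defaultdict


def detection_persistence(frames, cell_size=1.0):
    """Fraction of frames in which each (label, grid cell) is detected.

    `frames` is a list of frames; each frame is a list of (label, x, y)
    detections in world coordinates (an assumption of this sketch).
    """
    if not frames:
        raise ValueError("need at least one frame")
    counts = defaultdict(int)
    for detections in frames:
        # Snap each detection to a coarse grid cell; count a cell once per frame.
        cells = {
            (label, round(x / cell_size), round(y / cell_size))
            for label, x, y in detections
        }
        for cell in cells:
            counts[cell] += 1
    return {cell: n / len(frames) for cell, n in counts.items()}


def interpret(persistence, threshold=0.5):
    """Label each cell 'persistent' (probably a real object that is sometimes
    missed) or 'sporadic' (probably a hallucination)."""
    return {
        cell: "persistent" if rate >= threshold else "sporadic"
        for cell, rate in persistence.items()
    }


# Toy data: a TV near (2, 3) that is missed in one frame, plus two one-off
# "chair" detections at unrelated locations.
frames = [
    [("tv", 2.0, 3.0)],
    [("tv", 2.1, 2.9), ("chair", 7.0, 1.0)],
    [],
    [("tv", 1.9, 3.1)],
    [("tv", 2.0, 3.0), ("chair", 0.2, 5.0)],
]

if __name__ == "__main__":
    for cell, verdict in interpret(detection_persistence(frames)).items():
        print(cell, verdict)
```

A fixed threshold is the weakest part of this sketch. A probabilistic model replaces it with explicit miss and hallucination rates that are inferred from the data.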

More precisely, MetaCOG is a probabilistic model that defines a joint distribution over: (1) the state of the environment in which an image is taken, (2) the position and orientation from which the image is taken, (3) the error rates of the object detector for different types of objects, and (4) the outputs of the object detector. This joint distribution is broken down into the product of: (1) a prior distribution over environment states, which incorporates the Spelke principles, (2) a prior distribution over the position and orientation from which an image is taken, (3) a prior distribution over the rates at which the object detector makes errors for different categories of objects, and (4) a conditional distribution over the outputs of the object detector given the true environment state, the position and orientation from which an image is taken, and the error rates. Given a set of images from different scenes, the outputs of the object detector for each of those images, and the position and orientation from which each image was captured, an AI system using the MetaCOG model can use Bayesian inference to update its beliefs about the true state of the environment and the error rates of the object detection system. In this way, the AI system using the MetaCOG model can: (1) improve its model of the object detector over time as it’s exposed to more and more data from different environments, and (2) use this model of the object detector and the Spelke principles to correct the outputs of the object detector when it makes mistakes. See the two figures below for a conceptual schematic of MetaCOG and an example of a sequence of images of a scene taken from different positions and the inferences MetaCOG makes about the types and locations of objects.
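The factorization described above can be written out for a single scene as follows. The symbols are our own notation and do not come from the paper: W is the environment state, c_t the camera position and orientation for image t, θ the detector's per-category error rates, and d_t the detector's output for image t. Treating the T images as conditionally independent given W, c_t, and θ is an assumption of this sketch.

```latex
% Joint distribution for one scene observed in T images (notation assumed).
p(W, c_{1:T}, \theta, d_{1:T})
  = p(W)\, p(\theta) \prod_{t=1}^{T} p(c_t)\, p(d_t \mid W, c_t, \theta)

% Bayesian update once the camera poses and detector outputs are observed.
p(W, \theta \mid c_{1:T}, d_{1:T})
  \propto p(W)\, p(\theta) \prod_{t=1}^{T} p(d_t \mid W, c_t, \theta)
```

With several scenes, each scene would get its own W while θ is shared across scenes, which is what allows the error rates to be learned from accumulated data.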

MetaCOG Significantly Improves Object Detection Accuracy

To evaluate MetaCOG’s performance, we asked three questions: (1) Can MetaCOG learn an accurate model of an object detection system without feedback? (2) If so, how much data does MetaCOG need to learn this model? (3) Does learning this metacognitive model enable MetaCOG to achieve higher object detection accuracy than the base object detector? Concerning the first and second questions, we found that MetaCOG can learn accurate models of object detection systems from a small amount of data, with the error rates inferred by MetaCOG quickly converging to the true error rates as measured by mean squared error. Concerning the third question, we found that our implementation of MetaCOG resulted in performance gains of 10 to 20 percentage points relative to the baseline object detector. This improvement in accuracy is especially notable given that it was obtained through an unsupervised process that leverages only a small set of prior knowledge.
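To give a feel for how error rates can be learned without ground-truth labels, here is a toy sketch that is far simpler than MetaCOG and is not the paper's implementation. It assumes each scene has a single candidate location that contains an object with probability 0.5, that the detector fires independently in each frame, and that the detector is better than chance (the hallucination rate is below the detection rate). It computes a grid posterior over the miss rate and hallucination rate while marginalizing over the unknown presence of the object, then reports the mean squared error against the simulated true rates. All names and parameter values are hypothetical.

```python
import math
import random


def binom_pmf(k, n, p):
    """Probability of k successes in n independent trials with success prob p."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)


def simulate_counts(num_scenes, frames, miss, halluc, rng, p_present=0.5):
    """Per-scene detection counts; whether an object is present stays hidden."""
    counts = []
    for _ in range(num_scenes):
        present = rng.random() < p_present
        p_detect = (1 - miss) if present else halluc
        counts.append(sum(rng.random() < p_detect for _ in range(frames)))
    return counts


def posterior_mean_error_rates(counts, frames, grid=40, p_present=0.5):
    """Posterior means of (miss, halluc) under a uniform prior on a grid."""
    values = [(i + 0.5) / grid for i in range(grid)]
    log_post = {}
    for miss in values:
        for halluc in values:
            if halluc >= 1 - miss:
                continue  # assumption: detector is better than chance
            log_lik = 0.0
            for k in counts:
                # Marginalize over whether the object is really there.
                log_lik += math.log(
                    p_present * binom_pmf(k, frames, 1 - miss)
                    + (1 - p_present) * binom_pmf(k, frames, halluc)
                )
            log_post[(miss, halluc)] = log_lik
    top = max(log_post.values())
    weights = {key: math.exp(lp - top) for key, lp in log_post.items()}
    total = sum(weights.values())
    miss_hat = sum(m * w for (m, _), w in weights.items()) / total
    halluc_hat = sum(h * w for (_, h), w in weights.items()) / total
    return miss_hat, halluc_hat


if __name__ == "__main__":
    true_miss, true_halluc, frames_per_scene = 0.3, 0.1, 10
    for num_scenes in (5, 20, 80):
        counts = simulate_counts(
            num_scenes, frames_per_scene, true_miss, true_halluc, random.Random(0)
        )
        miss_hat, halluc_hat = posterior_mean_error_rates(counts, frames_per_scene)
        mse = ((miss_hat - true_miss) ** 2 + (halluc_hat - true_halluc) ** 2) / 2
        print(f"scenes={num_scenes:3d} miss={miss_hat:.3f} "
              f"halluc={halluc_hat:.3f} mse={mse:.5f}")
```

The better-than-chance constraint matters here. Without it the two hypotheses ("object present, often missed" and "object absent, often hallucinated") can swap roles and the posterior becomes symmetric.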

The fact that MetaCOG can identify when an object detector makes errors has an unexpected benefit. By collecting the frames where MetaCOG flags that an object has been missed or hallucinated, MetaCOG automatically creates a dataset of the cases where the object detector fails, which can then be fed back into the object detector for fine-tuning. We found that retraining a base object detector using this data substantially improved the performance of the base object detector. This result is significant because it means MetaCOG could be used to generate useful training data without human oversight, and generating training data using humans can be expensive. Additionally, for some applications where the speed and efficiency of object detection systems are important, it may be preferable to use MetaCOG to improve the performance of the base object detector offline, and then use just the improved base object detector, which is likely faster and more computationally efficient than MetaCOG, in production.
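The data-collection step described above might look roughly like the sketch below. It is only an illustration: `FrameRecord` and `build_finetuning_set` are hypothetical names, and comparing sets of category labels per frame (ignoring object locations) is a simplification that the actual system need not share.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FrameRecord:
    frame_id: str
    detected: frozenset  # labels output by the base object detector
    inferred: frozenset  # labels the metacognitive model infers are visible


def build_finetuning_set(records):
    """Keep only frames where the detector disagrees with the inferred scene,
    using the inferred labels as pseudo-labels for retraining."""
    dataset = []
    for record in records:
        missed = record.inferred - record.detected
        hallucinated = record.detected - record.inferred
        if missed or hallucinated:
            dataset.append(
                {
                    "frame_id": record.frame_id,
                    "labels": sorted(record.inferred),
                    "missed": sorted(missed),
                    "hallucinated": sorted(hallucinated),
                }
            )
    return dataset


records = [
    FrameRecord("f0", frozenset({"tv"}), frozenset({"tv"})),           # correct
    FrameRecord("f1", frozenset(), frozenset({"tv"})),                 # miss
    FrameRecord("f2", frozenset({"tv", "chair"}), frozenset({"tv"})),  # hallucination
]

if __name__ == "__main__":
    for example in build_finetuning_set(records):
        print(example)
```

Frames on which the detector and the inferred scene agree are skipped, so the resulting set concentrates on the detector's failure cases.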

Future Directions

In this work, we demonstrated the potential for AI systems to learn metacognitive models and use these models to improve their performance with minimal human intervention. Our work joins a growing trend of research developing metacognitive algorithms to improve the performance of AI systems. For example, the AlphaGo system, which achieved a milestone in AI by defeating the world’s top Go player in 2016, used a metacognitive algorithm (Monte Carlo Tree Search) to help it decide where to focus its computational resources when evaluating different possible moves [5].

Outside of computer vision, MetaCOG’s approach of using human knowledge to learn metacognitive models in an unsupervised manner could be applied to a wide range of important problems. For example, scientists and engineers often need to make judgments about when they can and can’t rely on different scientific models. Some scientific models, like quantum mechanics, are very accurate but are computationally intractable to use directly for most practical problems. In contrast, other scientific models, like the coarse-grained approximations of quantum mechanics used in chemistry and materials science, are more computationally tractable but less accurate than more computationally intensive models. Part of the art of science and engineering is determining when you can rely on different types of models and how to trade off computational complexity against accuracy and granularity. It may be possible to use approaches similar to MetaCOG to learn metacognitive models of scientific models. This could: (1) help scientists develop a more systematic understanding of when it makes sense to utilize different models, and (2) facilitate the development of AI systems that can effectively leverage and extend scientific knowledge.

Contributors

Research: Marlene Berke, Zhangir Azerbayev, Mario Belledonne, Zenna Tavares, Julian Jara-Ettinger

Article: Ravi Deedwania, Julian Jara-Ettinger

Acknowledgments: We thank Marlene Berke, Karen Schroeder, and Zenna Tavares for comments and feedback on this article.

References

[1] P. Nasca, “An anomalous-motion optical illusion,” Wikipedia (CC BY-SA 3.0).

[2] T. O. Nelson, “Metamemory: A theoretical framework and new findings,” in Psychology of Learning and Motivation, vol. 26, Elsevier, 1990, pp. 125–173.

[3] M. Berke, Z. Azerbayev, M. Belledonne, Z. Tavares, and J. Jara-Ettinger, “MetaCOG: A Hierarchical Probabilistic Model for Learning Meta-Cognitive Visual Representations,” presented at the 40th Conference on Uncertainty in Artificial Intelligence, Apr. 2024.

[4] E. S. Spelke and K. D. Kinzler, “Core knowledge,” Developmental Science, vol. 10, no. 1, pp. 89–96, 2007.

[5] D. Silver et al., “Mastering the game of Go with deep neural networks and tree search,” Nature, vol. 529, pp. 484–489, Jan. 2016.